Splunk Infrastructure

Splunk Components

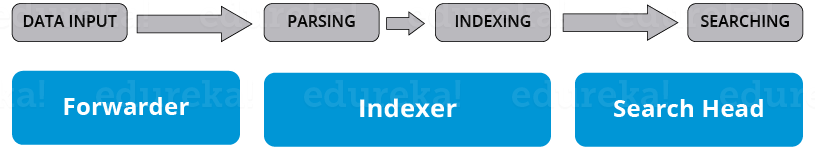

The image above shows the stages of the data pipeline and the Splunk components that fall under each stage.

There are 3 main components in Splunk:

- Splunk Forwarder, used for data forwarding

- Splunk Indexer, used for Parsing and Indexing the data

- Splunk Search Head, a GUI used for searching, analyzing and reporting
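The division of labor among the three components can be modeled in a few lines of Python. This is a minimal, in-memory sketch under stated assumptions, not how Splunk is built: the classes `Forwarder`, `Indexer` and `SearchHead` are hypothetical, events are plain dictionaries, the forwarder rotates through indexers round-robin and skips any that are down, and the search head sends the same search to every indexer and merges the partial results newest first.

```python
from dataclasses import dataclass, field
from itertools import cycle


@dataclass
class Indexer:
    """Hypothetical indexer: stores events and answers searches over them."""
    name: str
    up: bool = True
    events: list = field(default_factory=list)

    def index(self, event: dict) -> None:
        self.events.append(event)

    def search(self, term: str) -> list:
        # Return only the events whose raw text contains the search term.
        return [e for e in self.events if term in e["raw"]]


class Forwarder:
    """Hypothetical forwarder: load balances events across indexers."""

    def __init__(self, indexers: list):
        self.indexers = indexers
        self._rotation = cycle(indexers)

    def send(self, event: dict) -> str:
        # Try each indexer at most once per event; skip any that are down.
        for _ in range(len(self.indexers)):
            target = next(self._rotation)
            if target.up:
                target.index(event)
                return target.name
        raise RuntimeError("no indexer available")


class SearchHead:
    """Hypothetical search head: fans a search out to every peer and merges results."""

    def __init__(self, peers: list):
        self.peers = peers

    def search(self, term: str) -> list:
        results = [e for peer in self.peers for e in peer.search(term)]
        # Merge the partial results, newest event first.
        return sorted(results, key=lambda e: e["time"], reverse=True)
```

For example, with two indexers the first event lands on `idx1`, the second on `idx2`, and if `idx1` is then marked down the third also goes to `idx2`. Rotating per event is a simplification chosen to keep the example short.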

As the Splunk instance indexes your data, it creates a number of files. These files contain one of the following:

- Raw data in compressed form

- Indexes that point to raw data (index files, also referred to as tsidx files), plus some metadata files

These files reside in sets of directories called buckets.
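Because a bucket pairs compressed raw data with index files that point into it, a search can consult the index first and decompress only the events it needs. The Python sketch below models that idea; it is a simplification, not Splunk's on-disk format. The `Bucket` class is hypothetical, each raw event is compressed separately with `zlib`, and a dictionary mapping each lowercase term to event numbers stands in for a tsidx file.

```python
import re
import zlib
from collections import defaultdict


class Bucket:
    """Hypothetical bucket: compressed raw events plus a term index."""

    def __init__(self):
        self.raw_chunks = []            # one zlib-compressed blob per event
        self.index = defaultdict(set)   # term -> event numbers (tsidx stand-in)

    def add(self, event: str) -> None:
        event_id = len(self.raw_chunks)
        self.raw_chunks.append(zlib.compress(event.encode("utf-8")))
        # Index every alphanumeric term so searches need not scan the raw data.
        for term in re.findall(r"\w+", event.lower()):
            self.index[term].add(event_id)

    def search(self, term: str) -> list:
        # Look the term up in the index, then decompress only the matching events.
        ids = sorted(self.index.get(term.lower(), ()))
        return [zlib.decompress(self.raw_chunks[i]).decode("utf-8") for i in ids]
```

Compressing each event on its own keeps lookups simple; compressing events in larger blocks would give a better ratio at the cost of more decompression per lookup.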

Splunk processes the incoming data to enable fast search and analysis. It enhances the data in several ways, such as:

- Separating the data stream into individual, searchable events

- Creating or identifying timestamps

- Extracting fields such as host, source, and sourcetype

- Performing user-defined actions on the incoming data, such as identifying custom fields, masking sensitive data, writing new or modified keys, applying breaking rules for multi-line events, filtering unwanted events, and routing events to specified indexes or servers

This indexing process is also known as event processing.
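The event-processing steps listed above can be approximated in a short Python sketch. It is an illustration under assumptions, not Splunk's parsing engine: every event is assumed to start with a timestamp such as `2020-05-10 12:00:00` (which is how the stream is broken into events), 16-digit runs are treated as card numbers and masked, `DEBUG` events are filtered out, and events containing `ERROR` are routed to an index named `errors`. The function name `process` and the index names are hypothetical.

```python
import re
from datetime import datetime

# Assumption: each event begins with a timestamp like "2020-05-10 12:00:00".
EVENT_START = re.compile(r"^(?=\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2})", re.MULTILINE)
CARD_NUMBER = re.compile(r"\b\d{12}(\d{4})\b")


def process(stream: str, host: str, source: str, sourcetype: str) -> list:
    events = []
    # 1. Break the stream into events: a new event starts at each timestamp,
    #    so continuation lines of a multi-line event stay attached to it.
    for raw in EVENT_START.split(stream):
        raw = raw.strip()
        if not raw:
            continue
        # 2. Filter unwanted events.
        if " DEBUG " in raw:
            continue
        # 3. Identify the timestamp from the first 19 characters.
        ts = datetime.strptime(raw[:19], "%Y-%m-%d %H:%M:%S")
        # 4. Mask sensitive data, keeping only the last four digits.
        raw = CARD_NUMBER.sub(lambda m: "X" * 12 + m.group(1), raw)
        # 5. Route the event to an index and attach host, source and sourcetype.
        index = "errors" if " ERROR " in raw else "main"
        events.append({"_time": ts, "_raw": raw, "host": host, "source": source,
                       "sourcetype": sourcetype, "index": index})
    return events
```

In Splunk itself these rules are configured rather than coded; the sketch only shows the order in which such transformations can be applied to a raw stream.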

- The forwarder can receive data from various network ports, or run scripts to automate data forwarding

- It can also monitor files as they come in and detect changes in real time

- The forwarder can intelligently route data, clone it and load balance it before it reaches the indexer. Cloning creates multiple copies of an event right at the data source, whereas load balancing distributes data across several indexers so that, if one instance fails, the data can still be forwarded to another instance hosting an indexer

- The deployment server is used to manage the entire deployment, including its configurations and policies

- When data is received, it is stored in an indexer. The indexed data is divided into separate logical data stores, and on each data store you can set permissions that control what each user can view, access and use

- Once the data is in, you can search the indexed data from the search head and distribute searches to other search peers; their results are merged and sent back to the search head

- You can also schedule searches and create alerts that trigger when the results of a saved search meet certain conditions

- You can use saved searches to create reports and analyze data with visualization dashboards

- You can use Knowledge objects to enrich the existing unstructured data

- Search heads and knowledge objects can be accessed from the Splunk CLI or the Splunk Web interface. This communication happens over a REST API connection
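As an example of that REST communication, the Python sketch below builds (but does not send) a request that runs a search through Splunk's REST API. It assumes an instance on `localhost` listening on the default management port 8089, basic authentication with placeholder credentials, and the `/services/search/jobs/export` endpoint, which streams search results back; the helper name `build_search_request` is hypothetical, and the Splunk REST API reference should be checked before relying on these details.

```python
import base64
from urllib.parse import urlencode
from urllib.request import Request

# Assumptions: local instance, default management port, placeholder credentials.
BASE_URL = "https://localhost:8089"


def build_search_request(query: str, user: str, password: str) -> Request:
    """Build a POST request that asks Splunk to stream the results of `query` as JSON."""
    # Searches sent over REST must start with a command, so prefix plain queries.
    if not query.lstrip().startswith(("search", "|")):
        query = "search " + query
    body = urlencode({"search": query, "output_mode": "json"}).encode("ascii")
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return Request(
        f"{BASE_URL}/services/search/jobs/export",
        data=body,
        headers={"Authorization": f"Basic {token}",
                 "Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )
```

To actually run the search, the request can be passed to `urllib.request.urlopen`. A default installation uses a self-signed certificate on the management port, so certificate verification will usually need to be configured first.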

Splunk Infrastructure

Reviewed by ohhhvictor on May 10, 2020